Introduction

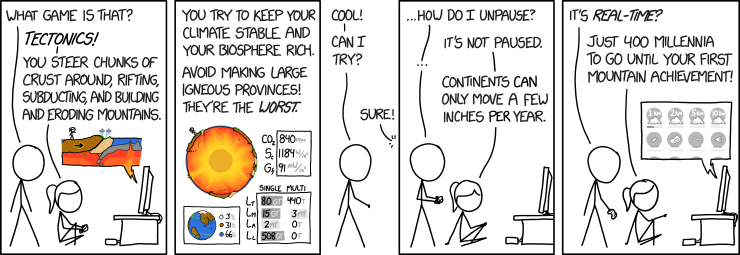

“Tectonics Game” (2018). XKCD. Available at: https://xkcd.com/2061/ (Accessed: 26th July 2023)

In a yet-to-be-published post I’m going to discuss how I’m refactoring monsternames-api (see https://sudoblark.com/2023/07/24/monsternames-api/) from a horrible, (relatively) expensive monolith into a lovely serverless application. That refactor inspired me to actually knuckle down and get some proper, modern infrastructure management in place for Sudoblark’s AWS. After all, I’ve set up cloud infrastructure from scratch for many organisations, so why not my own?

So, in this article I’ll take you through how I set up Terraform from scratch to manage Sudoblark’s AWS. It’s relatively easy.

What is Terraform?

TL;DR: it’s infrastructure-as-code (IaC). Terraform lets you move away from the horrible old ways of manually provisioning things and instead manage it all via code, applying the usual source control paradigms. For the long version I’d recommend reading the docs on Terraform’s own website.

The Goal

So what’s the goal here? What are our objectives? They’re as follows:

- Have a single repository in the Sudoblark GitHub to manage the entire account, later on simply instantiating other Sudoblark terraform modules for the creation of apps etc (see this article for the great mono vs micro repo debate)

- Store state remotely in S3

- Apply changes automatically on a merge to the main branch

- Demonstrate this, end-to-end, via the creation of a new S3 bucket.

In practice, that means the setup we are targeting is as follows:

So without further ado, let us get started.

The actual setup

Prerequisites

Before conducting the setup I had to sort out a few prerequisites. The instructions here were tested on macOS, so if you’re using a different operating system you’ll need to do your own research to figure out the equivalents.

- Install tfenv to manage different versions of terraform

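    git clone https://github.com/tfutils/tfenv.git ~/.tfenv
    echo 'export PATH="$HOME/.tfenv/bin:$PATH"' >> ~/.bash_profile

- Install the AWS CLI (the commands below fetch the Linux x86_64 installer; on macOS, use the AWSCLIV2.pkg installer from the AWS documentation instead)

    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    unzip awscliv2.zip
    sudo ./aws/install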

- Set up an appropriate profile block in my ~/.aws/config configuration using a secret access key for my IAM user (in a large organisation you’ll probably want to use single sign-on for this):

    [profile sudoblark]
    aws_access_key_id=<access key id>
    aws_secret_access_key=<access key secret>
    region=<aws region>

- Manually create an S3 bucket to store the remote state file. Yes yes I know I said we want to manage everything via IaC, but this initial bootstrap step is unavoidable sadly.
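If you’d rather not click around the console for that bootstrap step, the bucket can be created from the command line instead. This is a minimal sketch, not necessarily how the bucket for this article was made: the bucket name and region are the ones used in the backend block further down, the profile is the sudoblark one from above, and enabling versioning is an assumption on my part (the Terraform docs recommend it for state buckets). The run helper and DRY_RUN switch are purely illustrative; by default the script only prints the commands it would run.

```shell
# Sketch: bootstrap the remote-state bucket with the AWS CLI.
# Assumptions: bucket name and region match the backend block in main.tf,
# and the "sudoblark" profile exists in ~/.aws/config.
set -eu

BUCKET="terraform-sudoblark"
REGION="eu-west-2"
PROFILE="sudoblark"
DRY_RUN="${DRY_RUN:-1}"   # illustrative switch: 1 prints commands, 0 runs them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

bootstrap_state_bucket() {
  # Outside us-east-1 the region must also be given as a LocationConstraint
  run aws s3api create-bucket \
    --bucket "$BUCKET" \
    --region "$REGION" \
    --create-bucket-configuration "LocationConstraint=$REGION" \
    --profile "$PROFILE"
  # Optional (my assumption): keep old state versions around for recovery
  run aws s3api put-bucket-versioning \
    --bucket "$BUCKET" \
    --versioning-configuration "Status=Enabled" \
    --profile "$PROFILE"
}

bootstrap_state_bucket
```

Running it with DRY_RUN=0 executes the two commands for real, so double-check the bucket name first: S3 bucket names are globally unique.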

With all that done, I was ready to go.

Terraform code

After creating, and then cloning, my lovely new empty terraform.aws repo I had my single source of truth for Sudoblark’s AWS infrastructure only… nothing was in there. So I set to work and in a matter of minutes had everything I needed:

    % ls -la
    total 24
    drwxr-xr-x   9 bclark  staff   288 Jul 26 21:33 .
    drwxr-xr-x@ 48 bclark  staff  1536 Jul 26 15:20 ..
    drwxr-xr-x  14 bclark  staff   448 Jul 26 21:33 .git
    -rw-r--r--   1 bclark  staff  3939 Jul 26 21:03 .gitignore
    drwxr-xr-x  10 bclark  staff   320 Jul 26 21:26 .idea
    -rw-r--r--   1 bclark  staff   217 Jul 26 21:03 LICENSE.txt
    -rw-r--r--   1 bclark  staff  2720 Jul 26 21:03 README.md
    drwxr-xr-x   3 bclark  staff    96 Jul 26 21:05 docs
    drwxr-xr-x   6 bclark  staff   192 Jul 26 21:07 sudoblark

- docs just contains images and other bits of markdown for any documentation in the repo

- sudoblark is the name of the AWS account I’m managing

- the .gitignore is lifted and shifted from the wonderful folks at GitHub themselves: https://github.com/github/gitignore/blob/main/Terraform.gitignore

- Given I’m a one-man shop (at the moment), we just place everything at the account level, as I don’t intend on increasing my costs any time soon

  - For large organisations, you could use a folder structure to instead have multiple folders to represent multiple environments

  - Or use one account per environment; that’s probably better if you can afford it

  - Or use one repo per environment; see the aforementioned article for the great mono vs micro repo debate

main.tf just declares our remote state backend and provider config, set to automatically use the AWS CLI profile we made earlier (my WordPress doesn’t syntax-highlight Terraform yet, sadly):

    # Terraform configuration
    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 3.0"
        }
      }

      backend "s3" {
        bucket = "terraform-sudoblark"
        key    = "environments/production/tfstate"
        # Enable server side encryption for the state file
        encrypt = true
        region  = "eu-west-2"
      }
    }

    provider "aws" {
      region  = "eu-west-2"
      profile = "sudoblark"
    }

s3.tf just declares a new S3 bucket we’ll use to test our pipeline later on:

    resource "aws_s3_bucket" "bucket" {
      bucket = "sudoblark-testing"

      tags = {
        "Environment" = "Prod",
        "Service"     = "Testing"
      }
    }

    resource "aws_s3_bucket_acl" "acl" {
      bucket = aws_s3_bucket.bucket.id
      acl    = "private"
    }
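One step that is easy to forget at this point is initialisation: a fresh checkout needs terraform init before plan can download the AWS provider and talk to the S3 backend. The following is a minimal sketch of that local loop, assuming you run it from the repository root with the Terraform code in ./sudoblark. The run helper and DRY_RUN switch are purely illustrative; by default the script only prints the commands.

```shell
# Sketch of the local init/validate/plan loop.
# Assumption: executed from the repository root, Terraform code in ./sudoblark.
set -eu

TF_DIR="sudoblark"
DRY_RUN="${DRY_RUN:-1}"   # illustrative switch: 1 prints commands, 0 runs them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

local_checks() {
  # Download the provider and configure the S3 backend
  run terraform "-chdir=$TF_DIR" init
  # Check the configuration is syntactically valid and internally consistent
  run terraform "-chdir=$TF_DIR" validate
  # Show what would change without applying anything
  run terraform "-chdir=$TF_DIR" plan
}

local_checks
```

The -chdir option just saves a cd; running the same three commands from inside the sudoblark folder works equally well.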

And that’s it, we’re good to go:

    % terraform plan

    Terraform used the selected providers to generate the following execution
    plan. Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      # aws_s3_bucket.bucket will be created
      + resource "aws_s3_bucket" "bucket" {
          + acceleration_status         = (known after apply)
          + acl                         = "private"
          + arn                         = (known after apply)
          + bucket                      = "sudoblark-testing"
          + bucket_domain_name          = (known after apply)
          + bucket_regional_domain_name = (known after apply)
          + force_destroy               = false
          + hosted_zone_id              = (known after apply)
          + id                          = (known after apply)
          + object_lock_enabled         = (known after apply)
          + region                      = (known after apply)
          + request_payer               = (known after apply)
          + tags                        = {
              + "Environment" = "Prod"
              + "Service"     = "Testing"
            }
          + tags_all                    = {
              + "Environment" = "Prod"
              + "Service"     = "Testing"
            }
          + website_domain              = (known after apply)
          + website_endpoint            = (known after apply)

          + object_lock_configuration {
              + object_lock_enabled = (known after apply)

              + rule {
                  + default_retention {
                      + days  = (known after apply)
                      + mode  = (known after apply)
                      + years = (known after apply)
                    }
                }
            }

          + server_side_encryption_configuration {
              + rule {
                  + bucket_key_enabled = (known after apply)

                  + apply_server_side_encryption_by_default {
                      + kms_master_key_id = (known after apply)
                      + sse_algorithm     = (known after apply)
                    }
                }
            }

          + versioning {
              + enabled    = (known after apply)
              + mfa_delete = (known after apply)
            }
        }

    Plan: 1 to add, 0 to change, 0 to destroy.

CI/CD

Prerequisites, tflint and Infracost

For any effective CI/CD, we need to define precisely what we want. Based on the diagram provided in the setup section, the discrete actions we want to perform are:

- Centrally control the Terraform version so it’s the same across local development and CI/CD

- Run terraform init

- Run terraform validate

- Run terraform plan

- Run terraform apply

- Run tflint

- Run infracost

- Grant GitHub access to the Sudoblark AWS account

Thankfully, tfenv can use a .terraform-version file to automatically switch to the correct Terraform version. So we place one of those in the sudoblark folder:

    % cat sudoblark/.terraform-version
    1.2.7
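Creating that pin file can be scripted too. Below is a minimal sketch: the pin_terraform_version and install_pinned_version helpers are hypothetical names of my own, and the second one assumes tfenv is already on your PATH as set up in the prerequisites.

```shell
# Sketch: pin the Terraform version that tfenv should use.
set -eu

pin_terraform_version() {
  # $1 = directory holding the Terraform code, $2 = version to pin
  mkdir -p "$1"
  printf '%s\n' "$2" > "$1/.terraform-version"
}

install_pinned_version() {
  # With no arguments, tfenv resolves the version from .terraform-version,
  # so this must run inside the pinned directory. Not called by default.
  (cd "$1" && tfenv install && tfenv version-name)
}

TF_DIR="${TF_DIR:-sudoblark}"
pin_terraform_version "$TF_DIR" "1.2.7"
```

Calling install_pinned_version sudoblark afterwards should download the pinned release if it is missing and print the version tfenv has resolved.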

Terraform versioning sorted, we set up a .tflint.hcl file to configure tflint with the default settings:

    % cat sudoblark/.tflint.hcl
    plugin "terraform" {
      enabled = true
      preset  = "recommended"
    }

For Infracost we set up a new account, link the terraform.aws repository within the GUI and we’re good to go.

For granting access to the Sudoblark AWS account, we:

- Create a new IAM user with administrator access

- Generate an access key and store it somewhere very securely, preferably next to flammable material and a torch just in case

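- Create new secrets in our organisation, SUDOBLARK_AWS_ACCESS_KEY_ID and SUDOBLARK_AWS_ACCESS_KEY_VALUE, to hold the access key ID and secret respectively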
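The secrets can be created in the GitHub web UI, or from a terminal with the GitHub CLI. Here is a minimal sketch of the CLI route, assuming gh is installed and authenticated, that the organisation is named sudoblark (my guess, adjust to suit), and that the key material sits in the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables. The set_org_secret helper and DRY_RUN switch are illustrative only; by default nothing is sent to GitHub.

```shell
# Sketch: store the IAM access key as organisation secrets via the GitHub CLI.
# Assumptions: organisation name "sudoblark" (hypothetical), repository
# "terraform.aws", and an authenticated gh session.
set -eu

ORG="sudoblark"           # hypothetical organisation name
REPO="terraform.aws"
DRY_RUN="${DRY_RUN:-1}"   # illustrative switch: 1 prints commands, 0 runs them

set_org_secret() {
  # $1 = secret name, $2 = secret value (never echoed)
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ gh secret set $1 --org $ORG --repos $REPO"
  else
    # gh reads the secret value from stdin when no --body is given
    printf '%s' "$2" | gh secret set "$1" --org "$ORG" --repos "$REPO"
  fi
}

set_org_secret SUDOBLARK_AWS_ACCESS_KEY_ID "${AWS_ACCESS_KEY_ID:-}"
set_org_secret SUDOBLARK_AWS_ACCESS_KEY_VALUE "${AWS_SECRET_ACCESS_KEY:-}"
```

Limiting the secrets to the one repository with --repos keeps an administrator-level key away from every other workflow in the organisation.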

And with that we’re good to move on to the core terraform workflow.

Core terraform workflow

Now to move on to running terraform init/validate/plan/apply with GitHub Actions. After all, we don’t want to have to manually run terraform plans and applies.

For this the setup is again rather simple:

    % ls -la .github/workflows
    total 8
    drwxr-xr-x  4 bclark  staff  128 Jul 26 21:37 .
    drwxr-xr-x  3 bclark  staff   96 Jul 26 21:33 ..
    -rw-r--r--  1 bclark  staff  428 Jul 26 21:36 commit-to-pr.yaml
    -rw-r--r--  1 bclark  staff    0 Jul 26 21:33 merge-to-main.yaml

GitHub Actions will automatically detect workflows in a .github/workflows folder as per the docs. So creating the files is easy enough, but what about the content? Well that’s a bit more complicated, so I’ll explain it on a workflow-by-workflow basis. The only fundamentals you need to know beforehand are:

- A workflow has a trigger (or triggers), defined by the on keyword, which determines when it runs. This can be in response to an event like a pull request, a manual execution, or even on a cron schedule

- A workflow is made up of one or more jobs, which may have defined dependencies on one another

- A job is made up of one or more steps, which perform the actual actions part of GitHub Actions

- The GitHub Marketplace is a rich ecosystem; if you’ve thought of it, odds are so has someone else and they’ve made a plugin step for it already

In fact that last point is key. I initially set out to hand-craft a whole slew of commands, but after some research found that would have been a waste of time. The lovely dflook (check out their GitHub) has already done the heavy lifting for us: https://github.com/dflook/terraform-github-actions. This makes our workflow files stupidly simple:

commit-to-pr.yaml

    name: Terraform checks on pull request

    env:
      TERRAFORM_PATH: sudoblark
      AWS_ACCESS_KEY_ID: ${{ secrets.SUDOBLARK_AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.SUDOBLARK_AWS_ACCESS_KEY_VALUE }}
      AWS_DEFAULT_REGION: eu-west-2
      # Automatically generated token unique to this repo per workflow execution
      GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

    on: [pull_request]

    jobs:
      validation:
        name: Terraform validate
        runs-on: ubuntu-20.04
        steps:
          - uses: actions/checkout@v3
          - name: terraform validate
            uses: dflook/terraform-validate@v1
            with:
              path: ${{ env.TERRAFORM_PATH }}

      linting:
        name: Terraform lint
        runs-on: ubuntu-20.04
        steps:
          - uses: actions/checkout@v3
          - name: Install tflint
            uses: terraform-linters/setup-tflint@v3
          - name: Run tflint
            # Run from the Terraform folder so .tflint.hcl and the .tf files are picked up
            working-directory: ${{ env.TERRAFORM_PATH }}
            run: tflint

      plan:
        name: Terraform plan
        runs-on: ubuntu-20.04
        needs: [validation, linting]
        steps:
          - uses: actions/checkout@v3
          - id: install-aws-cli
            uses: unfor19/install-aws-cli-action@v1
          - name: terraform plan
            uses: dflook/terraform-plan@v1
            with:
              path: ${{ env.TERRAFORM_PATH }}

merge-to-main.yaml

    name: Terraform apply on merge to main

    env:
      TERRAFORM_PATH: sudoblark
      AWS_ACCESS_KEY_ID: ${{ secrets.SUDOBLARK_AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.SUDOBLARK_AWS_ACCESS_KEY_VALUE }}
      AWS_DEFAULT_REGION: eu-west-2
      # Automatically generated token unique to this repo per workflow execution
      GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

    on:
      push:
        branches:
          - main

    permissions:
      contents: read
      pull-requests: write

    jobs:
      apply:
        name: Terraform apply
        runs-on: ubuntu-20.04
        steps:
          - name: Checkout
            uses: actions/checkout@v3
          - name: terraform apply
            uses: dflook/terraform-apply@v1
            with:
              path: ${{ env.TERRAFORM_PATH }}
              auto_approve: true

The Result

Our initial PR to create the test bucket looks pretty nice:

And the merged commit successfully applies!

Conclusion

So that’s it, we’ve managed to successfully:

- Have a single repository in the Sudoblark GitHub to manage the entire AWS account

- Run terraform validate, tflint, infracost and terraform plan on a pull request to the main branch via GitHub Actions

- Surface the results of the above on the pull request in a readable format

- Apply changes automatically on a merge to the main branch

- Demonstrate this, end-to-end, via the creation of a new S3 bucket

I hope you’ve found this article somewhat enjoyable, not too much of a bore to read and have either learned something or been inspired to do a thing. I certainly plan on doing things with this new setup to provision many Sudoblark things.