Written 05 September 2025 ~ 10 min read

Database interoperability in Python utilising the Repository Enterprise Pattern

Benjamin Clark

Part 3 of 3 in Software Odds and Ends

Introduction

Recently, I’ve been knee-deep in Python. Leave me in a dark room with a tea, an IDE, and a programming problem and I’m happy so it’s been quite enjoyable.

One interesting use-case I had recently was refactoring an existing application of mine to lay the groundwork for a future migration from one database to another. Namely, from NoSQL (DynamoDB) to SQL (Postgres or MySQL, still up in the air about it).

The setup originally used the Python dictionary dispatch pattern (which I’ve written about here), but that seemed a little immature as I hacked away so I sat down and thought… there must be a better way to dynamically build in multiple database backends and still have code that will make sense in 6 months.

Turns out there was, so I figured a little write-up would be of interest.

The goal

Within this blog post, I aim to:

- Describe the “Repository Enterprise pattern”

- Show how this may be used to dynamically determine the backend for data persistence

- Link to a basic Typer application which demonstrates these principles

Prerequisites

I’m assuming you’re pretty familiar with Python, object-oriented programming (OOP), NoSQL and SQL

If not, well they’re pretty advanced topics I’d do a disservice to if I tried to explain here. But I’ve linked a few “getting started” guides below:

Python

- The Python Tutorial (official docs)

- “Classes” (official docs)

- Python documentation index / Beginner’s Guide

SQL (relational)

- PostgreSQL Tutorial (official docs)

- SQLBolt — interactive SQL lessons

- Python

sqlite3module (official docs) - SQLite documentation index

NoSQL

- MongoDB — Getting Started (official docs)

- Amazon DynamoDB — Getting Started (official docs)

- Martin Fowler — Introduction to NoSQL

You’ll also need Python 3.10 on your system to follow along and play around with the demo Typer application at the end.

The Repository Enterprise Pattern

Introduction

This pattern is well-documented, and written, by Martin Fowler in Patterns of Enterprise Architecture.

However, for this post I’m leaning heavily on Microsoft’s interpretation here, as my order for the above Fowler book is still being shipped (but when it arrives I will sit down with a tea and give it a good read).

The core concerns of this pattern are simply:

- Keeping the modelling of the problem-domain seperate from persistence

The idea is that a Repository interface acts as a domain-facing abstraction between objects in the domain, and the actual data layer, allowing you to

decouple representation of domain objects from the logic required to persist them. This centralises things like invariants and other such business logic

in one easy to maintain place.

Worked Example

Problem Domain

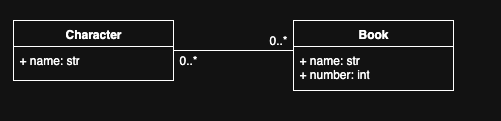

For example, let’s say your problem-domain is the Discworld Series of books and you’re trying to model what character(s) appear in what book(s).

Your domain-model layer may look as follows:

Which may be implemented with simple dataclasses:

from dataclasses import dataclass

@dataclass

class Book:

name: str

number: int

@dataclass

class Character:

name: str

@dataclass

class CharacterToBookMapping:

book: Book

character: CharacterRepositories

Base Abstracts

Yes, I could have used protocols instead. And, coming from C#, when I learned about Protocols they actually made more sense to me than abstracts with their weird and wonderful ability to mix abstract interfaces with concrete methods.

But, in this example at least, I felt that ABC inheritance was better. Of course, if this was instead a guide on designing SDKs with a plugin architecture then I think protocols would allow the typecasting flexibility required for that use case.

Each of these classes in the problem-domain then implement a base abstract to define a known interface, and then concrete implementations for each persistence layer you expect to interact with. For example, we can use an Enum to define a common return for all repositories and define base abstracts.

from __future__ import annotations

from abc import ABC, abstractmethod

from enum import Enum, unique

from discworld_cli.domain.models import Book, Character

@unique

class RepositoryResult(str, Enum):

SUCCESS = "success"

FAILED = "failed"

class BookRepository(ABC):

@abstractmethod

def record(self, book: Book) -> RepositoryResult: ...

class CharacterRepository(ABC):

@abstractmethod

def record(self, character: Character) -> RepositoryResult: ...

class CharacterToBookMappingRepository(ABC):

@abstractmethod

def record(self, book: Book, character: Character) -> RepositoryResult: ...Concrete Implementation for JSON

With the base abstract interfaces defined, we may then define a concrete implementation for persisting objects to JSON.

First, we set up some helpers:

from __future__ import annotations

from dataclasses import dataclass

from pathlib import Path

from typing import Any

import json

import tempfile

import os

from os import PathLike

from discworld_cli.domain.repositories import (

BookRepository,

CharacterRepository,

CharacterToBookMappingRepository,

RepositoryResult,

)

from discworld_cli.domain.models import Book, Character

Pathish = str | PathLike[str]

def _read_list(path: Path) -> list[dict[str, Any]]:

if not path.exists() or path.stat().st_size == 0:

return []

try:

data = json.loads(path.read_text(encoding="utf-8"))

return data if isinstance(data, list) else []

except json.JSONDecodeError:

return []

def _atomic_write_text(path: Path, text: str) -> None:

path.parent.mkdir(parents=True, exist_ok=True)

with tempfile.NamedTemporaryFile(

"w", delete=False, dir=path.parent, encoding="utf-8"

) as tmp:

tmp.write(text)

tmp_path = Path(tmp.name)

os.replace(tmp_path, path)

def _write_list(path: Path, data: list[dict[str, Any]]) -> None:

_atomic_write_text(path, json.dumps(data, indent=2, ensure_ascii=False))Second, we implement our concrete classes:

# JSON Book

@dataclass

class JSONFileBookRepository(BookRepository):

file_path: Pathish

def record(self, book: "Book") -> RepositoryResult:

path = Path(self.file_path)

items = _read_list(path)

exists = any(

i.get("name") == book.name and i.get("number") == book.number for i in items

)

if not exists:

items.append({"name": book.name, "number": book.number})

_write_list(path, items)

return RepositoryResult.SUCCESS

# JSON Character

@dataclass

class JSONFileCharacterRepository(CharacterRepository):

file_path: Pathish

def record(self, character: "Character") -> RepositoryResult:

path = Path(self.file_path)

items = _read_list(path)

if not any(i.get("name") == character.name for i in items):

items.append({"name": character.name})

_write_list(path, items)

return RepositoryResult.SUCCESS

# JSON Mapping

@dataclass

class JSONFileCharacterToBookMappingRepository(CharacterToBookMappingRepository):

file_path: Pathish

def record(self, book: Book, character: Character) -> RepositoryResult:

path = Path(self.file_path)

items = _read_list(path)

entry = {

"book": {"name": book.name, "number": book.number},

"character": {"name": character.name},

}

exists = any(

i.get("book", {}).get("name") == entry["book"]["name"]

and i.get("book", {}).get("number") == entry["book"]["number"]

and i.get("character", {}).get("name") == entry["character"]["name"]

for i in items

)

if not exists:

items.append(entry)

_write_list(path, items)

return RepositoryResult.SUCCESSConcrete Implementation for SQLite

Again, the first step is to set up some helpers and - because this is an actual database - define our schema:

from __future__ import annotations

from dataclasses import dataclass

import sqlite3

from os import PathLike

from discworld_cli.domain.repositories import (

BookRepository,

CharacterRepository,

CharacterToBookMappingRepository,

RepositoryResult,

)

from discworld_cli.domain.models import Book, Character

Pathish = str | PathLike[str]

SCHEMA_SQL = """

PRAGMA foreign_keys = ON;

CREATE TABLE IF NOT EXISTS books (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

number INTEGER NOT NULL,

UNIQUE(name, number)

);

CREATE TABLE IF NOT EXISTS characters (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL UNIQUE

);

CREATE TABLE IF NOT EXISTS character_book_mappings (

book_id INTEGER NOT NULL,

character_id INTEGER NOT NULL,

PRIMARY KEY (book_id, character_id),

FOREIGN KEY (book_id) REFERENCES books(id) ON DELETE CASCADE,

FOREIGN KEY (character_id) REFERENCES characters(id) ON DELETE CASCADE

);

"""

class _SQLiteRepoBase:

def __init__(self, db: Pathish | sqlite3.Connection):

if isinstance(db, sqlite3.Connection):

self.conn = db

self._owns = False

else:

self.conn = sqlite3.connect(db)

self._owns = True

self.conn.row_factory = sqlite3.Row

self.conn.executescript(SCHEMA_SQL)

self.conn.commit()

def close(self) -> None:

if self._owns:

self.conn.close()

def __enter__(self):

return self

def __exit__(self, *exc):

self.close()Second, our concrete implementations:

# SQLite Book

@dataclass

class SQLiteBookRepository(_SQLiteRepoBase, BookRepository):

db: Pathish | sqlite3.Connection

def __post_init__(self): # dataclass hook

super().__init__(self.db)

def record(self, book: "Book") -> RepositoryResult:

try:

self.conn.execute(

"INSERT OR IGNORE INTO books(name, number) VALUES (?, ?)",

(book.name, book.number),

)

self.conn.commit()

return RepositoryResult.SUCCESS

except sqlite3.Error:

return RepositoryResult.FAILED

# SQLite Character

@dataclass

class SQLiteCharacterRepository(_SQLiteRepoBase, CharacterRepository):

db: Pathish | sqlite3.Connection

def __post_init__(self):

super().__init__(self.db)

def record(self, character: "Character") -> RepositoryResult:

try:

self.conn.execute(

"INSERT OR IGNORE INTO characters(name) VALUES (?)",

(character.name,),

)

self.conn.commit()

return RepositoryResult.SUCCESS

except sqlite3.Error:

return RepositoryResult.FAILED

# SQLite Mapping

@dataclass

class SQLiteCharacterToBookMappingRepository(

_SQLiteRepoBase, CharacterToBookMappingRepository

):

db: Pathish | sqlite3.Connection

def __post_init__(self):

super().__init__(self.db)

def record(self, book: "Book", character: "Character") -> RepositoryResult:

try:

self.conn.execute(

"INSERT OR IGNORE INTO books(name, number) VALUES (?, ?)",

(book.name, book.number),

)

self.conn.execute(

"INSERT OR IGNORE INTO characters(name) VALUES (?)",

(character.name,),

)

self.conn.execute(

"""

INSERT OR IGNORE INTO character_book_mappings(book_id, character_id)

VALUES (

(SELECT id FROM books WHERE name=? AND number=?),

(SELECT id FROM characters WHERE name=?)

)

""",

(book.name, book.number, character.name),

)

self.conn.commit()

return RepositoryResult.SUCCESS

except sqlite3.Error:

return RepositoryResult.FAILEDSwitching between repositories as required

Now, that is quite a bit of code and it may look confusing at first. But it allows us to do cool things like determining the persistence backend at runtime and act accordingly.

Doing so is a two-stage process. The first involves a simple factory to set up our repositories as required:

from __future__ import annotations

import sqlite3

from dataclasses import dataclass

from pathlib import Path

from typing import Callable, Literal

from discworld_cli.domain.repositories import (

BookRepository,

CharacterRepository,

CharacterToBookMappingRepository,

)

from discworld_cli.infrastructure.json.repositories import (

JSONFileBookRepository,

JSONFileCharacterRepository,

JSONFileCharacterToBookMappingRepository,

)

from discworld_cli.infrastructure.sqlite.repositories import (

SQLiteBookRepository,

SQLiteCharacterRepository,

SQLiteCharacterToBookMappingRepository,

)

Backend = Literal["sqlite", "json"]

@dataclass

class Repositories:

books: BookRepository

characters: CharacterRepository

mappings: CharacterToBookMappingRepository

close: Callable[[], None]

def make_repositories(

*,

backend: Backend,

sqlite_path: str | Path = "app.db",

json_dir: str | Path = "data",

) -> Repositories:

if backend == "sqlite":

conn = sqlite3.connect(sqlite_path)

return Repositories(

books=SQLiteBookRepository(db=conn),

characters=SQLiteCharacterRepository(db=conn),

mappings=SQLiteCharacterToBookMappingRepository(db=conn),

close=conn.close,

)

elif backend == "json":

base = Path(json_dir)

return Repositories(

books=JSONFileBookRepository(file_path=base / "books.json"),

characters=JSONFileCharacterRepository(file_path=base / "characters.json"),

mappings=JSONFileCharacterToBookMappingRepository(

file_path=base / "mappings.json"

),

close=lambda: None,

)

else:

raise ValueError(f"Unknown backend: {backend!r}")Whilst the second is a very simple, and easy to read, catalogue service which uses the appropriate implementation at runtime:

from __future__ import annotations

from discworld_cli.domain.models import Book, Character

from discworld_cli.domain.repositories import (

BookRepository,

CharacterRepository,

CharacterToBookMappingRepository,

RepositoryResult,

)

class CatalogService:

def __init__(

self,

books: BookRepository,

characters: CharacterRepository,

mappings: CharacterToBookMappingRepository,

) -> None:

self.books = books

self.characters = characters

self.mappings = mappings

def add_book(self, name: str, number: int) -> RepositoryResult:

return self.books.record(Book(name=name, number=number))

def add_character(self, name: str) -> RepositoryResult:

return self.characters.record(Character(name=name))

def map_character(

self, book_name: str, book_number: int, character_name: str

) -> RepositoryResult:

return self.mappings.record(

Book(name=book_name, number=book_number),

Character(name=character_name),

)A Simple Typer Application to demonstrate the pattern

This is a good theory and all that, but what does it mean in practice? How could we implement it in an actually useful fashion?

Well I can’t share any of the code I’ve written for other companies that demonstrate the pattern, but I can make a silly CLI application to demonstrate the principle.

So, that’s what I did. And, considering the Death of Rats sits on my desk at all times, I decided to make a silly little Typer CLI that allows you to persist information about Discworld Characters and Books to either a JSON or SQLite backend.

It’s open-source, in source control, and available at sudoblark/sudoblark.patterns.repository-enterprise.

Have a look, have a poke, and most importantly read the README and see how this simple pattern allows swapping backends with a simple feature flag and then lament at all the refactoring you want to do.

*a perfectly acceptable state of affairs as far as I’m concerned

Part 3 of 3 in Software Odds and Ends