Written 25 July 2023 ~ 14 min read

monsternames-api

Benjamin Clark

Introduction

Many moons ago I decided to try and make a text-based roguelite game for the terminal. But then life got in the way, so it never really worked out.

But from the ashes arose something useful, at least. For the game I wanted to randomly generate enemies, traps, monsters etc. and, being me, I wanted each enemy to have a naming theme. The reasoning for this, and the naming themes themselves, are now lost to my memory.

But what remains is a fully functional REST API, complete with fancy behavioural tests. Perhaps more impressively, there’s also a third-party website that uses my REST API to do something silly and fun. So, in this article I’ll go over the structure of the REST API, how it’s coded, and the behavioural testing, in the hope it proves useful to someone else when cross-referenced with the source code itself. If nothing else, avoid my mistakes: salt your API keys, and don’t over-engineer things.

What I’m not going to do is provide a comprehensive tutorial on how to build a CRUD application; there’s already a plethora of better articles out there on how to do that.

Oh, and I’ll link the API and cool third-party website too.

Links

| Website | https://monsternames-api.com |
|---|---|
| Source | https://github.com/Sudoblark/monsternames-api |
| Third-party usage | https://monster.mnuh.org |

Where I refer to files and their contents, I assume the reader has that file open in the source code, to fully follow what’s being said.

Requirements

Every project starts with an idea, which then morphs into a set of requirements, and the requirements for this project are rather simple:

- Support CRUD operations against a number of endpoints
- Each endpoint must correspond to a monster class in the game
- Each monster class may support one of the following naming formats:
  - First name
  - Last name
  - First name and last name
- Unregistered endpoints should return a suitable error message
- Invalid POST requests should return a helpful error message
- Unauthorised POST requests should return a helpful error message
- POSTing a record which already exists should return a helpful error message
- API key authorisation should be used for POST and DELETE requests
- Behavioural testing should test all methods on all endpoints
- The REST API should run inside a Docker container for ease of use and deployment
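As a rough illustration of how the error-handling requirements above might translate into status codes, here is a minimal, framework-agnostic sketch. It is not the project’s code: the handle_post function, the REGISTERED_ENDPOINTS mapping, the in-memory dictionary standing in for the database and the choice of 404/401/400/409 status codes are all my assumptions.

```python
from http import HTTPStatus

# Hypothetical registry: POST endpoint path -> the field it expects.
REGISTERED_ENDPOINTS = {
    "/api/v1.0/goblin/firstName": "firstName",
    "/api/v1.0/goatmen/firstName": "firstName",
}


def handle_post(path, payload, api_key, valid_keys, existing):
    """Return (status_code, body) for a POST request.

    `existing` maps each endpoint path to the set of names already stored,
    standing in for the database in this sketch.
    """
    # Unregistered endpoint.
    if path not in REGISTERED_ENDPOINTS:
        return HTTPStatus.NOT_FOUND, {"error": f"Endpoint {path} is not registered"}
    # Unauthorised request.
    if api_key not in valid_keys:
        return HTTPStatus.UNAUTHORIZED, {"error": "Missing or invalid x-api-key header"}
    # Invalid payload.
    field = REGISTERED_ENDPOINTS[path]
    value = payload.get(field)
    if not isinstance(value, str) or not value.strip():
        return HTTPStatus.BAD_REQUEST, {"error": f"Expected a non-empty '{field}' field"}
    # Record already exists.
    names = existing.setdefault(path, set())
    if value in names:
        return HTTPStatus.CONFLICT, {"error": f"{field} '{value}' already exists"}
    names.add(value)
    return HTTPStatus.OK, {"payload": {field: value}}
```

Keeping the checks in one plain function like this makes each error path easy to unit test without spinning up a web server or a database.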

Structure

With the requirements settled, I began to work (many moons ago) on this little side project. As these were the dark days before I knew of the wonders of pyproject.toml and Poetry, I first specified all of my requirements in a requirements.txt file and got to work.

The file structure is fairly standard:

| Folder/File | Purpose |
|---|---|
| .circleci | CI/CD configuration for CircleCI |
| features | To store configuration and behavioural testing with Behave! |
| images | Static images for the website |
| src | The meat and potatoes of our Python program |
| .gitignore | Self-explanatory. RTM. |
| LICENSE | First thing I make on any project. |
| dockerfile | Required to create a container for the API. |
| readme.md | Docs. I love documentation. I actually wanted to put stuff in here not just leave it empty. |
| requirements.txt | The old-fashioned way to do Python dependency management. |

Object relational mapping (ORM)

Off the bat there’s one thing I knew I didn’t want to do: hand-craft SQL. It’s not very fun, and neither is SQL injection, so I began hunting around for a suitable ORM library. For the uninitiated, the premise of ORM is simple:

Create a bridge between objects in code and relational databases, such that data may be represented - and interacted with - using object-orientated programming (OOP) principles.

Given this was back before I went bald, I hadn’t heard of SQLAlchemy just yet, so I settled on PeeWee as my ORM library of choice. It integrates well with Flask (we’ll get on to that) but, crucially for me, it was lightweight and had a simple-to-understand syntax.

The process from there was straight-forward enough:

- Create object models in code to represent tables etc. in the database
- Create setup scripts:
  - One for the CI, to set up the database with a dummy API key for behavioural tests
  - One to initialise the entire database and perform table migrations etc. based on the PeeWee models

So I set to work and created all of the PeeWee models with the setup as follows:

- src/database contains all pertinent code for the ORM library:
  - ciSetup.py (yes, it’s not snake_case; be kind, I still had hair back then) creates a dummy user and API key so the CI can authenticate against a locally running monsternames-api instance
  - dbVars.py reads configuration from environment variables. If I were to redo this project, this would be the low-hanging fruit to amend with error handling, maybe even changing it to read a configuration file as input instead.
  - models.py is where we define all of our relational models as objects in code
  - setup.py is used to initialise the database
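Since dbVars.py is the low-hanging fruit for error handling, here is a hedged sketch of what that might look like. The environment variable names (DB_HOST, DB_NAME, DB_USER, DB_PWD, plus an optional DB_PORT) are assumptions loosely based on the Docker build arguments used in the CI, and load_db_config, DbConfig and ConfigError are hypothetical names rather than anything in the repository.

```python
import os
from dataclasses import dataclass


class ConfigError(RuntimeError):
    """Raised when required database settings are missing or malformed."""


@dataclass(frozen=True)
class DbConfig:
    host: str
    name: str
    user: str
    password: str
    port: int = 3306


# Hypothetical environment variable names; the real dbVars.py may differ.
REQUIRED = {"host": "DB_HOST", "name": "DB_NAME", "user": "DB_USER", "password": "DB_PWD"}


def load_db_config(environ=os.environ):
    """Build a DbConfig, reporting every missing variable at once."""
    missing = [var for var in REQUIRED.values() if not environ.get(var)]
    if missing:
        raise ConfigError("Missing database settings: " + ", ".join(missing))
    # The original hard-codes port 3306; here it becomes an optional override.
    port_text = environ.get("DB_PORT", "3306")
    try:
        port = int(port_text)
    except ValueError:
        raise ConfigError(f"DB_PORT must be an integer, got {port_text!r}") from None
    return DbConfig(port=port, **{field: environ[var] for field, var in REQUIRED.items()})
```

Failing fast with every missing variable listed at once beats discovering them one connection error at a time.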

Most of this you can read and figure out, but the cool bit is this:

```python
# src/database/models.py
import peewee
from peewee import MySQLDatabase

import dbVars  # src/database/dbVars.py (import path assumed)

# Connect to DB
db = MySQLDatabase(dbVars.dbName, host=dbVars.dbHost, port=3306, user=dbVars.dbUser, passwd=dbVars.dbPassword)


# LIST OF DATABASE MODELS
class GoblinFirstName(peewee.Model):
    firstName = peewee.CharField()

    class Meta:
        database = db
```

It’s that simple to define fields in a table with an ORM, whilst the Meta class associates our model with whatever database we’ve connected to. Defining the connection via environment variables decouples configuration from code (always a good thing), and ensures the code tested in CI is the same code that actually runs in production.

Our setup.py then becomes dead simple (could be a lot simpler if I had the time to refactor):

```python
# src/database/setup.py
...
try:
    models.GoblinFirstName.create_table()
    ...
    models.db.commit()
...
```

And that’s it. That’s all that was needed to define my database schema and initialise it.

Flask

After setting up all of the database models, the next questions I had were:

- How do I map these to endpoints?
- How do I serve static content?

To answer the first, I knew I’d need some wrappers around the PeeWee models themselves. My solution was the monster_endpoint class in src/endpoints/monster_endpoints which, at 162 lines of code, is far too large to simply copy and paste here. The key, though, was to use this class as an abstraction over the PeeWee models. The idea was good, and the code still works, but looking back there are many things I’d change with the benefit of many more years of development. Especially the API key lookup logic, which still hurts me to this day.
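On the API key front, one common pattern (a swapped-in approach, not the project’s own logic) is to hand out keys of the form id.secret, store only a salted hash of the secret, look the record up by its public id, and compare hashes in constant time. A minimal standard-library sketch follows; the key format, the issue_key and verify_key names, the in-memory store and the iteration count are all assumptions.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 100_000  # assumption: tune for your hardware


def hash_secret(secret, salt):
    """Salted PBKDF2-SHA256 hash of the secret part of a key."""
    return hashlib.pbkdf2_hmac("sha256", secret.encode(), salt, ITERATIONS)


def issue_key(store):
    """Create a key, store only its salt and hash, and return the plaintext once."""
    key_id = secrets.token_hex(4)
    while key_id in store:  # avoid (unlikely) id collisions
        key_id = secrets.token_hex(4)
    secret = secrets.token_urlsafe(32)  # URL-safe alphabet never contains "."
    salt = secrets.token_bytes(16)
    store[key_id] = (salt, hash_secret(secret, salt))
    return f"{key_id}.{secret}"


def verify_key(store, presented):
    """Look the record up by its public id, then compare hashes in constant time."""
    key_id, sep, secret = presented.partition(".")
    record = store.get(key_id)
    if not sep or record is None:
        return False
    salt, expected = record
    return hmac.compare_digest(hash_secret(secret, salt), expected)
```

The public id is what keeps lookup cheap: because every secret has its own random salt, you can’t find a record by hashing the presented key, so the id tells you which salt and hash to check against.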

With a wrapper present, I needed a lightweight web application framework to serve this content up via a web server. Thankfully, Flask came to the rescue. There are endless debates over Flask vs Django, but my own personal preference is Flask. Mapping endpoints to methods in my wrapper was then a lot easier than I had imagined:

```python
from flask import Flask, request

application = Flask(__name__)
...


@application.route('/api/v1.0/goblin', methods=['GET'])
@get_route
def get_goblin():
    return GoblinEndpoint.return_name()


@application.route('/api/v1.0/goblin/firstName', methods=['POST'])
@monster_route
def post_goblin_first_name():
    return GoblinEndpoint.insert_first_name(request)
```

All that was needed in the end was:

- A Flask route decorator
- A decorator to add CORS headers (get_route)

And it was as simple as that. The fact an ORM library was used meant that the required GET/POST methods could be handled via OOP principles as opposed to hand-crafting SQL.
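The get_route decorator itself isn’t reproduced in this article, so the following is only a sketch of how a CORS decorator could work. It assumes views return a body, a (body, status) pair or a (body, status, headers) tuple with dict headers, all of which Flask accepts; add_cors_headers and the wildcard default origin are hypothetical.

```python
from functools import wraps


def add_cors_headers(origin="*"):
    """Hypothetical decorator factory that appends a CORS header to a view's response."""
    def decorator(view):
        @wraps(view)  # keep the view's name, which Flask uses as the endpoint name
        def wrapper(*args, **kwargs):
            result = view(*args, **kwargs)
            # Normalise a plain body to (body, status). (body, headers) pairs are
            # not handled in this sketch.
            if not isinstance(result, tuple):
                result = (result, 200)
            if len(result) == 2:
                body, status = result
                headers = {}
            else:
                body, status, headers = result
            # Copy rather than mutate the view's own headers dict.
            headers = {**headers, "Access-Control-Allow-Origin": origin}
            return body, status, headers
        return wrapper
    return decorator
```

It would sit beneath @application.route in the same position as @get_route. functools.wraps matters here: Flask uses the view function’s name as its endpoint name by default, so without it every decorated view would be called wrapper and route registration would clash.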

Static content proved just as easy:

```python
from flask import render_template


@application.route('/')
def home():
    return render_template('home.html')
```

Thus, I could craft some really basic HTML/JS/CSS under src/static to serve as the static web page, and I was off.

In hindsight - although I don’t think it was available at the time - I would have just used Swagger.

Docker

The docker side is where I was most comfortable, having come from a SysAdmin background before worming my way into Development.

The dockerfile is nothing special. It does everything you’d expect it to, with the entry point simply running our src/database/setup.py to initialise the database before running Flask as the web server.

Behavioural Testing

With the base API in place, I wanted to know if it worked. Way back then I didn’t know about the wonders of PyTest and MonkeyPatch, but I did know about Cucumber for end-to-end behavioural testing of Infrastructure.

And I knew that Python had its own flavour of Cucumber in the form of Behave!, so I got cracking with the basic setup as follows:

- The features folder contains the root of all behavioural testing in the project:
  - .feature files provide a plain-English test definition, allowing us to outline any number of Scenarios and test cases
  - environment.py defines the environmental needs for each test
  - steps/steps.py implements the actual logic of the tests

And while that may seem like a lot, it’s dead easy.

environment.py simply ensures our tests use the same API key as set in our CI setup script in our ORM implementation, and sets the query URL to a locally running instance of our app:

```python
def before_all(context):
    context.base_url = "http://localhost:5000"
    context.api_key = "helloworld"
```
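One small tweak I’d consider, in keeping with decoupling configuration from code, is letting environment variables override these hard-coded values, so the same suite can point at a container on another port or at a staging instance. A sketch, where MONSTERNAMES_BASE_URL and MONSTERNAMES_API_KEY are hypothetical variable names and the defaults preserve the original behaviour:

```python
# features/environment.py (sketch)
import os


def before_all(context):
    # Hypothetical variable names; defaults match the original hard-coded values.
    base_url = os.environ.get("MONSTERNAMES_BASE_URL", "http://localhost:5000")
    # Steps build URLs as base_url + "/api/...", so drop any trailing slash.
    context.base_url = base_url.rstrip("/")
    context.api_key = os.environ.get("MONSTERNAMES_API_KEY", "helloworld")
```

The trailing slash is stripped because the step implementations concatenate base_url with endpoint paths that already start with a slash.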

api.feature defines tests for every single endpoint. There’s a lot, so let’s just home in on the tests for goatmen:

```gherkin
Feature: API functionality

  Scenario Outline: /api/v1.0/goatmen
    Given a <field> of <field_value>
    Then I should be able to POST to <post_endpoint>
    And GET to <get_endpoint> will contain <return_fields>

    Examples:
      | field     | field_value | post_endpoint               | get_endpoint      | return_fields      |
      | firstName | Fluffy      | /api/v1.0/goatmen/firstName | /api/v1.0/goatmen | fullName,firstName |
      | firstName | Squiggles   | /api/v1.0/goatmen/firstName | /api/v1.0/goatmen | fullName,firstName |
      | firstName | Flopsy      | /api/v1.0/goatmen/firstName | /api/v1.0/goatmen | fullName,firstName |
      | firstName | Bugsy       | /api/v1.0/goatmen/firstName | /api/v1.0/goatmen | fullName,firstName |
      | firstName | Tooty       | /api/v1.0/goatmen/firstName | /api/v1.0/goatmen | fullName,firstName |
```

Finally, steps/steps.py provides all the basic logic we need:

```python
from requests import post, get
from behave import given, then
from asserts import assert_equal


@given("a {field} of {field_value}")
def step_imp(context, field, field_value):
    context.data = {field: field_value}


@then("I should be able to POST to {post_endpoint}")
def step_imp(context, post_endpoint):
    req = post(context.base_url + post_endpoint, data=context.data, headers={"x-api-key": context.api_key})
    assert_equal(req.status_code, 200)


@then("GET to {get_endpoint} will contain {return_fields}")
def step_imp(context, get_endpoint, return_fields):
    req = get(context.base_url + get_endpoint)
    response = req.json()
    for field in return_fields.split(","):
        assert_equal(
            field in response,
            True
        )
```

The result is that by simply running python3 -m behave we execute 50 separate tests in under a second, thoroughly testing every endpoint in the REST API with a plethora of data. If you examine the api.feature file you can see, clearly and concisely, exactly what is tested, how, and with what data. Meanwhile, testing new endpoints or expanding test data is as simple as writing more plain-text tables or table rows.
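Behave does this expansion itself, but to show why adding a table row is all it takes, here is a simplified standard-library sketch of what a Scenario Outline does conceptually: each Examples row is substituted into the <placeholder> steps to produce one concrete scenario. This is an illustration, not Behave’s actual parser, and expand_outline is a hypothetical name.

```python
import re


def expand_outline(steps, examples_table):
    """Substitute each Examples row into the <placeholder> steps of an outline."""
    # Split each "| a | b |" line into stripped cells.
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in examples_table.strip().splitlines()
    ]
    header, *data = rows
    scenarios = []
    for row in data:
        values = dict(zip(header, row))
        # Replace every <name> in every step with that row's value.
        scenarios.append(
            [re.sub(r"<(\w+)>", lambda m: values[m.group(1)], step) for step in steps]
        )
    return scenarios
```

Five rows in the goatmen table therefore become five independent scenarios, each running the same three steps with different data.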

Whilst this is an old project, the test suite is perhaps the part that has aged best, and it still holds up as an example of how to do basic end-to-end API testing.

CI/CD

The CI/CD for this particular project is sparse. I can’t remember why I didn’t test the code on every commit, but I didn’t. That’s certainly not an example to follow.

What I did set up, though, was a tag and push to AWS ECR on every merge to master, which is worth examining for its simplicity. I’ve since implemented similar, more mature workflows across various CI platforms, and the general pattern is sound:

- Authenticate to the remote destination
- Build the thing
- Push the thing

In a more mature setup you’ll want a test step between the build and push steps; otherwise, how do you have confidence the thing actually works?

For ECR the logic is quite simple and I don’t think it needs much explaining:

```yaml
- run:
    name: Login to Prod ECR
    command: |
      set -eo pipefail
      aws configure set aws_access_key_id $PROD_AWS_ACCESS_KEY_ID --profile default
      aws configure set aws_secret_access_key $PROD_AWS_SECRET_ACCESS_KEY --profile default
      aws configure set default.region $AWS_REGION --profile default
      aws ecr get-login-password --region $AWS_REGION --profile default | docker login --username AWS --password-stdin $PROD_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com

- run:
    name: Build image
    command: |
      docker build -t monsternames . --build-arg db_host="$DB_HOST" --build-arg db_name="$DB_NAME" --build-arg db_user="$DB_USER" --build-arg db_pwd="$DB_PWD" --build-arg web_host="$WEB_HOST"

- run:
    name: Tag with circleCI tag and push
    command: |
      docker tag monsternames:latest $PROD_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/monsternames:${CIRCLE_SHA1:0:7}
      docker push $PROD_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/monsternames:${CIRCLE_SHA1:0:7}
```

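The $-prefixed variables are provided by setting secret environment variables within the CircleCI GUI, except for CIRCLE_SHA1, which CircleCI sets automatically to the hash of the commit being built. A more mature setup would store just the AWS keys in the CI and pull the rest from whatever flavour of parameter store you fancy: AWS Systems Manager Parameter Store, Azure Key Vault etc.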

Other than that, we just tag and push the image using the first seven characters of the commit’s SHA1 hash, so that we can trace the image back to its commit later on.
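The tag comes from Bash substring expansion: ${CIRCLE_SHA1:0:7} takes the first seven characters of the commit hash. The equivalent image reference, sketched in Python with a hypothetical ecr_image_ref helper (the account ID, region and SHA in the usage lines are made up):

```python
def ecr_image_ref(account_id, region, repository, commit_sha, length=7):
    """Build the registry/repository:tag reference that the CircleCI job pushes."""
    if len(commit_sha) < length:
        raise ValueError("commit SHA is shorter than the requested tag length")
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    # Same as ${CIRCLE_SHA1:0:7} in Bash: the first `length` characters.
    return f"{registry}/{repository}:{commit_sha[:length]}"


if __name__ == "__main__":
    # Made-up values, purely for illustration.
    print(ecr_image_ref("123456789012", "eu-west-2", "monsternames",
                        "0123456789abcdef0123456789abcdef01234567"))
    # → 123456789012.dkr.ecr.eu-west-2.amazonaws.com/monsternames:0123456
```

Because the tag is an abbreviated commit hash, git can resolve it straight back to the commit that produced the image.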

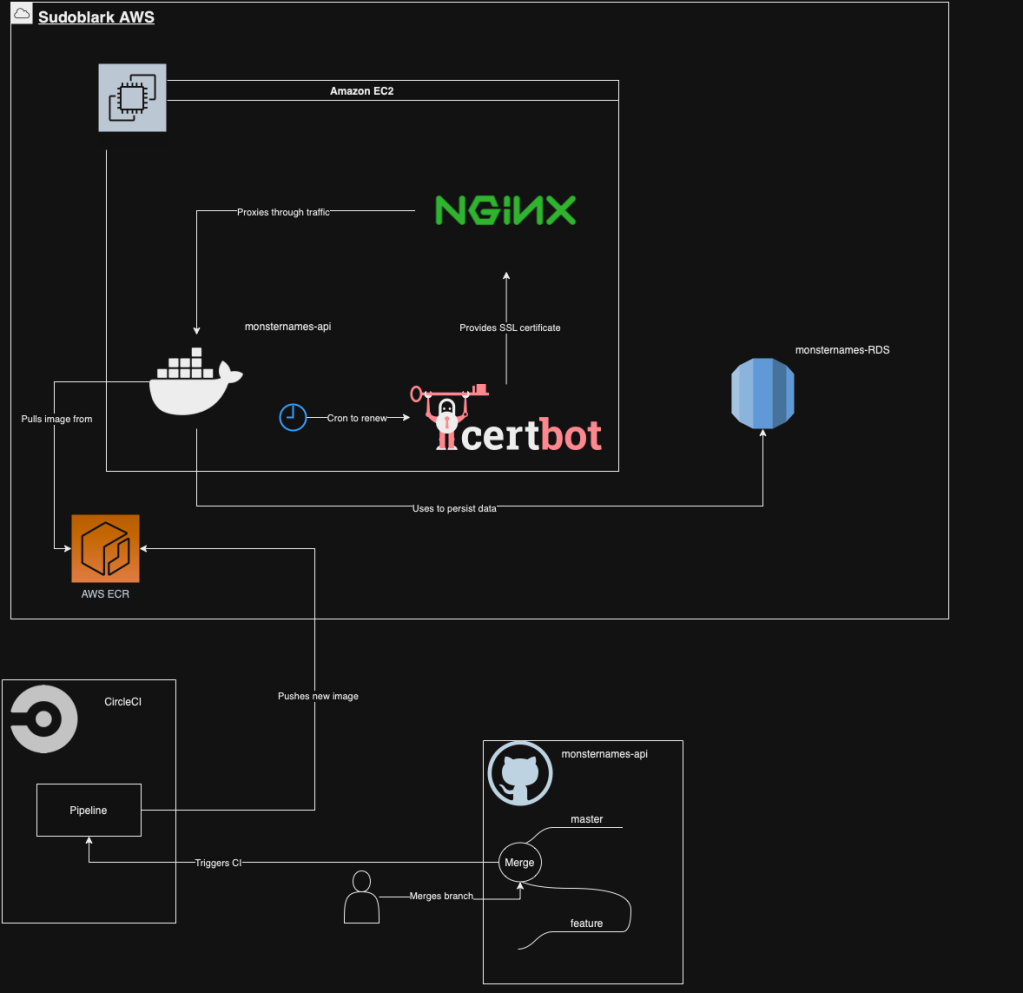

Infrastructure

Well, given we’ve got a working REST API, we need to deploy it. Otherwise, how can people get silly names for their ogres?

For a silly dummy project like this I wanted minimal cost, so I deployed to an EC2 instance at the cheapest tier I could find. A more enterprise solution would use Kubernetes, AWS ECS/Fargate, Azure Container Apps etc. What we do have, though, is quite simple:

- An EC2 instance running in AWS on a public subnet with an Elastic IP
- A security group limiting world access to the EC2 instance to ports 80/443 only
- An RDS instance on a private subnet
- A security group limiting the RDS instance so it is only accessible from the EC2 instance

Nginx

Nginx acts as a reverse proxy, redirecting port 80 to 443 and forwarding 443 to our local app as follows:

- SSL set up with a self-signed certificate via:

```shell
openssl req -x509 -newkey rsa:4096 -nodes -out cert.pem -keyout key.pem -days 365
```

- /etc/nginx/sites-enabled/monsternames_api acting as our site’s config:

```nginx
server {
    listen 443 ssl;
    server_name SERVERNAME;

    ssl_certificate /path/to/cert.pem;
    ssl_certificate_key /path/to/key.pem;

    location / {
        proxy_pass http://127.0.0.1:8000;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}

server {
    listen 80;
    server_name SERVERNAME;

    location / {
        return 301 https://$host$request_uri;
    }
}
```

- Finally, the default site is unlinked so that monsternames-api is now the default site for traffic:

```shell
unlink /etc/nginx/sites-enabled/default
```

Running the service

Before it was containerised, I used a complicated supervisor setup.

Since it was containerised, it’s as simple as running the container with a restart policy of always, and it’s worked ever since.

Would I recommend this for production? Absolutely not. Did I do this 3 years ago as a side project? Yes.

SSL Certificates

To automate certificate generation, certbot is used. This tool automatically generates, and renews, SSL certificates using Let’s Encrypt as the certificate authority.

The setup is surprisingly simple:

- Add the certbot package repository:

```shell
add-apt-repository ppa:certbot/certbot
```

- Install certbot:

```shell
apt-get install python-certbot-nginx
```

- Generate a certificate. This amends the SSL directives in nginx so they are managed by certbot:

```shell
certbot --nginx -d monsternames-api.com
```

- Set up a cron job (via crontab -e) so the certificate automatically renews without issue:

```
0 12 * * * /usr/bin/certbot renew --quiet
```

With that, we’ve got automatic SSL certificate generation, forever, for free.

This was first set up in July 2020, and it has happily ticked along without issue ever since. No SSL renewal headaches, no cross-referencing spreadsheets, no chasing finance for invoicing or anything.
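Running certbot renew daily is safe because it only replaces certificates that are close to expiry. Let’s Encrypt certificates are valid for 90 days, and certbot’s long-standing default is to renew within 30 days of expiry (check renew_before_expiry for your version). The decision can be sketched with the standard library’s ssl.cert_time_to_seconds; should_renew, days_until_expiry and RENEW_WINDOW_DAYS are hypothetical names, not certbot internals.

```python
import ssl
import time

RENEW_WINDOW_DAYS = 30  # assumption: certbot's default window, unchanged in config


def days_until_expiry(not_after, now=None):
    """`not_after` uses OpenSSL's text format, e.g. 'Jan  5 09:34:43 2018 GMT'."""
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    now = time.time() if now is None else now
    return (expires_at - now) / 86400


def should_renew(not_after, now=None):
    """True once the certificate is inside the renewal window."""
    return days_until_expiry(not_after, now) <= RENEW_WINDOW_DAYS
```

The notAfter string for a certificate on disk can be read with openssl x509 -enddate -noout -in cert.pem.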

Diagram

The above was quite wordy, so perhaps a diagram will better show the setup:

As you can see it’s simplistic, and for a cheap open source project not too bad. Sure it’s not a multi-region Kubernetes cluster, but I like to get out the house on the weekends.

Example Usage

So with all that said, I promised some fun example usage of the API. After all, it’s all well and good making this thing, but what’s the point if it’s not used?

Thankfully, it is used by https://monster.mnuh.org which uses my API to generate names, another API to generate hideous monster images and a third to grab quotes from the Simpsons… then smashes them all together to make a monster card.
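For anyone wanting to build something similar, a minimal client needs nothing beyond the standard library. This is a sketch, not how monster.mnuh.org is implemented: the base URL comes from the links table, the /api/v1.0/<monster> path follows the GET routes shown earlier, and fetch_monster_name is a hypothetical helper. The opener parameter exists so the function can be exercised without network access.

```python
import json
import urllib.request

BASE_URL = "https://monsternames-api.com"


def fetch_monster_name(monster, base_url=BASE_URL, opener=urllib.request.urlopen):
    """GET /api/v1.0/<monster> and return the decoded JSON body."""
    url = f"{base_url}/api/v1.0/{monster}"
    with opener(url, timeout=10) as response:
        return json.load(response)
```

For goatmen, the api.feature table expects the response to contain fullName and firstName; other monster classes may return different fields depending on their naming format.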

Have a play yourself! Personally, my favourite one I’ve generated in a while is Fat Johnny Punching:

Conclusion

Fat Johnny Punching aside, I hope this has been a somewhat informative rambling about an old open source project of mine. It needs many improvements, but there are a few diamonds in the rough, and hopefully you learned something new, or got some kind of inspiration, from reading my ramblings.