Written 14 March 2025 ~ 17 min read

Terraform with Data Structures and Algorithms

Benjamin Clark

Part 1 of 2 in Sudoblark Best Practices

Introduction

Hello all.

Recently, I’ve been doing a few technical talks at various places, and it got me thinking that some of the topics I’m covering might also be useful as a blog post, so that attendees can read my mad ramblings at their own pace.

So, here we are. Perhaps the first of many such blog posts.

The problem

- Infrastructure-as-Code (IaC) allows programmatic, declarative definition of our Infrastructure.
- In many organisations, this is handled by a DevOps or Cloud function which may or may not have a computer science or programming background.
- Terraform is used as a “wishlist” of resources, ending up with Terraform files that are 1000s of lines long with copy/pasted resource blocks everywhere.
- Thus, we lose perhaps Terraform’s biggest benefit: the single source of truth.
- We also lose the features of a programming language, whilst gaining all the baggage. Extensibility, multiplicity and inheritance are replaced by closely-coupled code, repeated blocks of code, and the same “plumbing” repeated over and over again.

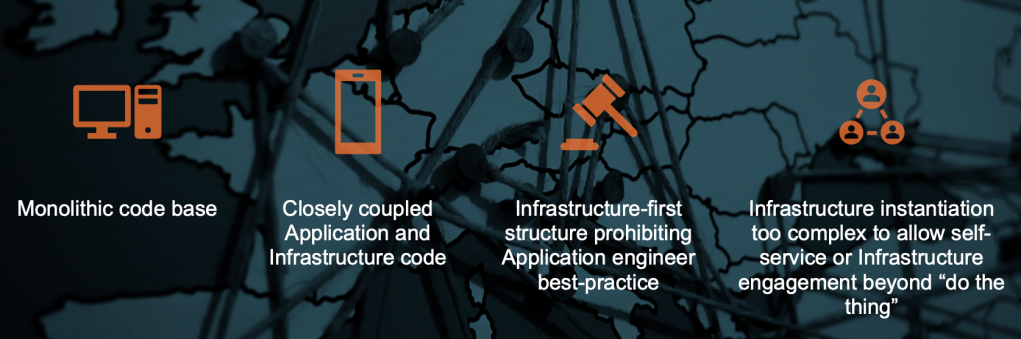

These problems often manifest in the same four pain points I’ve seen over and over again in my career:

This also fails to realise a fundamental truth:

Infrastructure is only useful when it fulfils a business need, that need is often encapsulated in some form of application code, and business needs - as we all know - are liable to change on a whim

The impact

The impact of such technical problems manifests in a myriad of truly frustrating ways, such as:

- Ineffective and long onboarding, as team members need to get to grips with “the big bad” codebase that drives everything the team does.
- Application engineers are unable to perform at their best due to the complex manner in which their code is deployed into an environment.
- An Infrastructure-first approach prohibits application best practice such as unit tests, linting, and regression testing.
- Closely coupled, monolithic, codebases can reduce release cadence to a crawl and prevent parallel development of features.
- Infrastructure is created by the Infrastructure team… who inherently do not understand the application code being deployed as well as the application engineers who actually wrote it.
- Bad documentation: a “big bad” monolithic repo is really, really, really hard to document.

A path forward

Reviewing such problems, and the impact they may have, we may ask “well that’s all good and well, but we need this code!”.

Do you?

What is often needed is a way to provision infrastructure quickly, effectively, and with absolute clarity at all times about:

- What is being deployed
- Why it’s being deployed

That may seem self-evident, but it’s the first hurdle and many teams fall at it. I’ve provided consultancy services in many organisations, and the simple question of “what are you building, and why?” is often a hard one to answer. But it shouldn’t be.

The actual requirements

With these questions - and the usual pitfalls - in mind, we may come up with a comprehensive set of requirements to work towards for our Terraform:

- Application and Infrastructure code decoupled at rest
- Allow application engineers to follow their own best-practice, and Infrastructure engineers the same, provided a common interface is shared
- Modularise Terraform to allow for self-service

Note that this is just one part of the puzzle. Other things, such as comprehensive READMEs, standardised deployment patterns, and release engineering as a proper discipline, are all required to get the most value out of this working pattern. But we don’t have the space to cover that here.

Repository structure

Modular Terraform for the user

For the user, Terraform should be like any other software package. Namely, if they adhere to the known, public, interface - and fulfil its contextual dependencies - they should be able to perform CRUD operations on any number of resources they like.

If you’re unfamiliar with CRUD (create, read, update, delete), it’s a well-known set of operations that provide the building blocks to create more complex functionality. Codecademy has a pretty good article on it.

The public interface we shall define for users will be that of complex data structures. This usually will be a matrix, or in Terraform parlance a list of maps of objects. Thus, we may perform the usual matrix operations - mutation, removal, concatenation etc - but within the context of Infrastructure management.
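As a rough illustration of what these matrix operations might look like in Terraform, the sketch below builds a small list of objects and applies concatenation, removal and mutation using built-in functions. The local names and attribute values are hypothetical and are not taken from any Sudoblark module.

```hcl
locals {
  # Hypothetical rows: each object describes one conceptual resource.
  core_lambdas = [
    { name = "ingest", memory = 128 },
    { name = "transform", memory = 256 },
  ]

  extra_lambdas = [
    { name = "export", memory = 128 },
  ]

  # Concatenation: join two lists of rows into one.
  all_lambdas = concat(local.core_lambdas, local.extra_lambdas)

  # Removal: keep every row except the one named "export".
  without_export = [
    for row in local.all_lambdas : row if row.name != "export"
  ]

  # Mutation: override one attribute on one row and leave the rest untouched.
  resized = [
    for row in local.all_lambdas :
    row.name == "transform" ? merge(row, { memory = 512 }) : row
  ]
}
```

In day-to-day use most of these operations are simply edits to the list literal; the functions matter more when lists are assembled from several sources.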

Crucially, knowledge of Terraform may be required to create such modules, but it is not required to utilise them. An Infrastructure engineer may create a module, instantiate it in a repo, and walk away… and from then on the application engineer can create whatever Infrastructure they require to their heart’s content.

We shall break down what each requirement means for the user in turn.

Known public interface

The interface for the module(s) you are using should be well-known, documented, and with absolute clarity at all times on:

- What arguments the data structure takes, and their permissible values
- Outputs, if any, of the module
- What resource(s) the module creates for you
- Constraints on module usage

In essence, like any other piece of software users interact with, these modules should have an enforced contract. As well as being well-known, the interface should make explicit:

- Contextual dependencies
- Constraints
- Inputs
- Outputs

If you adhere to the public interface, and fulfil the contextual dependencies, you should be able to create, update or destroy any number of resources you like. It’s that simple.

Data structures

If you are interacting within the existing guardrails of the platform - namely, you are adhering to the contract - interaction should merely require amendment of data structures.

Your extract-transform-load (ETL) process suddenly needs 3 times the number of lambdas? Add dictionaries to the matrix defining them.

Missed a resource for an IAM policy for your lambda? Mutate the data structure.

No longer need that db.m7i.48xlarge RDS instance as the persistent storage for your WordPress instance (hint: you never did anyway)? Remove it from the data structure and make finance happy.
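To make that concrete, here is a minimal sketch of what the user-facing side might look like, using the raw_s3_files attributes documented for the s3-files module later in this post. The source path, bucket names and file names are placeholders, and any other inputs the real module requires are omitted.

```hcl
module "s3_files" {
  # Placeholder path: point this at wherever the module actually lives.
  source = "./modules/s3-files"

  raw_s3_files = [
    {
      name               = "etl-config"
      source_folder      = "files"
      source_file        = "config.json"
      destination_key    = "config/config.json"
      destination_bucket = "example-dev-config"
    },
    # Need another file uploaded? Append another object.
    # No longer need one? Delete its object and apply.
    {
      name               = "etl-schema"
      source_folder      = "files"
      source_file        = "schema.json.tpl"
      destination_key    = "config/schema.json"
      destination_bucket = "example-dev-config"
      template_input     = { environment = "dev" }
    },
  ]
}
```

Every change the user makes is a change to this list; none of the resource blocks behind it need to be read or understood.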

Knowledge of Terraform

Here’s the TL;DR for the user: no knowledge of Terraform is required to create or change Infrastructure.

Modular Terraform for the developer

For the developer, we define more stringent requirements related to how these modules are actually created:

- Each module requires a well-defined, documented, public interface outlining requirements, optionals, constraints, and dependencies.
- Data structures utilised must be a collection of some form to allow for multiplicity.
- Data structures must be mutable to allow changing of existing Infrastructure.
- Modules must use a unified index for readability and cross-referencing.
- The code must still be elegant under the hood.

We shall break down each requirement in turn.

Well-defined interface

An interface is useless if it is not known.

This may take many forms; the simplest is a multi-line description string in your module’s variables.tf file. For example, in sudoblark.terraform.modules.s3-files:

Data structure
---------------
A list of dictionaries, where each dictionary has the following attributes:

REQUIRED
---------
- name : Friendly name used through Terraform for instantiation and cross-referencing of resources,
  only relates to resource naming within the module.
- source_folder : Folder where the {source_file} lives.
- source_file : The path under {source_folder} corresponding to the file to upload.
- destination_key : Key in S3 bucket to upload to.
- destination_bucket : The S3 bucket to upload the {source_file} to.

OPTIONAL
---------
- template_input : A dictionary of variable input for the template file needed for instantiation (leave blank if no template required)

A perhaps more indexable (if that’s a word) way of doing this is to use a tool such as MkDocs to essentially mimic the HashiCorp registry in terms of documentation.

The choice is really up to you.

Data structures

Mutable collections in Terraform have many types, such as:

- List
- Object

The simplest I’ve found in practice is a list of objects, passed in via a single variable in to our module.

For example in sudoblark.terraform.modules.s3-files:

variable "raw_s3_files" {
  description = <<EOT
Data structure
---------------
A list of dictionaries, where each dictionary has the following attributes:

REQUIRED
---------
- name : Friendly name used through Terraform for instantiation and cross-referencing of resources,
  only relates to resource naming within the module.
- source_folder : Folder where the {source_file} lives.
- source_file : The path under {source_folder} corresponding to the file to upload.
- destination_key : Key in S3 bucket to upload to.
- destination_bucket : The S3 bucket to upload the {source_file} to.

OPTIONAL
---------
- template_input : A dictionary of variable input for the template file needed for instantiation (leave blank if no template required)
EOT
  type = list(
    object({
      name               = string,
      source_folder      = string,
      source_file        = string,
      destination_key    = string,
      destination_bucket = string,
      template_input     = optional(map(string), {})
    })
  )
}

Unified index

A unified index is required for both:

- Readability
- Cross-referencing of resources

What do I mean by this? If we take a basic module - such as sudoblark.terraform.module.aws.state-machine - and look at how it’s creating state machines:

locals {
  actual_state_machines = {
    for state_machine in var.raw_state_machines :
    state_machine.suffix => merge(state_machine, {
      state_machine_definition = templatefile(state_machine.template_file, state_machine.template_input)
      policy_json              = data.aws_iam_policy_document.attached_policies[state_machine.suffix].json
      state_machine_name       = format("%s-%s-%s-stepfunction", var.environment, var.application_name, state_machine.suffix)
    })
  }
}

module "step_function_state_machine" {
  for_each = local.actual_state_machines
  depends_on = [
    data.aws_iam_policy_document.attached_policies
  ]
  source  = "terraform-aws-modules/step-functions/aws"
  version = "4.2.0"

  name         = each.value["state_machine_name"]
  create_role  = true
  policy_jsons = [each.value["policy_json"]]
  definition   = each.value["state_machine_definition"]

  logging_configuration = {
    "include_execution_data" = true
    "level"                  = "ALL"
  }

  cloudwatch_log_group_name              = "/aws/vendedlogs/states/${each.value["state_machine_name"]}"
  cloudwatch_log_group_retention_in_days = each.value["cloudwatch_retention"]

  service_integrations = {
    stepfunction_Sync = {
      # Set to true to use the default events (otherwise, set this to a list of ARNs; see the docs linked in locals.tf
      # for more information). Without events permissions, you will get an error similar to this:
      # Error: AccessDeniedException: 'arn:aws:iam::xxxx:role/step-functions-role' is not authorized to
      # create managed-rule
      events = true
    }
  }
}

We can see that each instance of module.step_function_state_machine uses each.value["suffix"] as its index.

We can also see that each state machine has a reference to data.aws_iam_policy_document.attached_policies[state_machine.suffix].json within its attributes.

Modules, by necessity (and in order to be actually useful), abstract away the interlinked complexities of Terraform into a singular data structure for consumption by a user. But we still need to make all of those individual resources ourselves for the module to actually function, and those resources will have relations to each other that need to be maintained.

By using data that is either:

- Available natively in the data which the user provides
- Inferred from data that the user provides

we may create a singular, unique, key for each conceptual resource that needs to be made. Under the hood, we then use that key when creating resources to ensure they’re all grouped, conceptually, by the same item the user wishes to make. This makes it readable in state, such that it’s abundantly clear what conceptual unit a resource belongs to.
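Because everything hangs off that key, it helps to fail early when two items would share one. The sketch below is one way this might be enforced, assuming a hypothetical raw_items variable whose name attribute is the index; it is not taken from the Sudoblark modules.

```hcl
variable "raw_items" {
  description = "Hypothetical input: a list of objects, each with a unique name."
  type = list(
    object({
      name = string
    })
  )

  validation {
    # distinct() drops duplicates, so the two lengths only match
    # when every name in the list is unique.
    condition     = length(distinct([for item in var.raw_items : item.name])) == length(var.raw_items)
    error_message = "Each item must have a unique name, as it is used to index every resource created for that item."
  }
}

locals {
  # Re-key the list as a map so everything downstream is addressed by name.
  items = { for item in var.raw_items : item.name => item }
}
```

Terraform would reject duplicate keys in the for expression anyway, but a validation block lets the module explain the problem in terms the user recognises.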

This also makes it usable in Terraform, and underpins this whole pattern. By using such an index, we can preserve relations between resources during implementation to achieve the behaviour we want; just look at the example above, or any of the Terraform modules available on Sudoblark’s GitHub.
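Stripped of any cloud provider, the idea might be sketched as below. It uses the built-in terraform_data resource (available from Terraform 1.4) purely as a stand-in for real resources; the item names and attributes are made up for illustration.

```hcl
locals {
  # Hypothetical input, already keyed by the unified index.
  items = {
    alpha = { size = 1 }
    beta  = { size = 2 }
  }
}

# One stand-in "policy" per item, keyed by the same index.
resource "terraform_data" "policy" {
  for_each = local.items
  input    = "policy-for-${each.key}"
}

# One stand-in "machine" per item. Each looks up its own policy through
# each.key, so items never pick up another item's policy.
resource "terraform_data" "machine" {
  for_each = local.items
  input = {
    name   = each.key
    size   = each.value.size
    policy = terraform_data.policy[each.key].output
  }
}
```

In state these appear as terraform_data.policy["alpha"] and terraform_data.machine["alpha"], which is the readability benefit described above: the key says which conceptual unit each resource belongs to.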

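It’s also why this pattern only applies to Terraform 0.12 or later, as that release introduced iteration constructs such as for expressions. Note that for_each on module blocks followed in 0.13, and the optional(...) defaults used in the examples here need 1.3. Prior to this, we had to use integer indexing via count… which is totally incompatible with this pattern.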

Elegance

It’s all good and well giving the user a clean, well-defined, interface for consumption… but the underlying implementation code still needs to be elegant.

Some poor sod (probably you) might need to add a feature to it in six months, or fix a bug, or hand it to a new hire. If the implementation code isn’t readable, well-documented, or clean… good luck with that.

Thankfully, this pattern actually makes it simpler than others to have elegant code under the hood. Let’s go back to the sudoblark.terraform.module.aws.state-machine example and break it down.

variables.tf

Here we define our well-known interface and contextual dependencies, along with input validation.

# Input variable definitions

variable "environment" {
  description = "Which environment this is being instantiated in."
  type        = string
  validation {
    condition     = contains(["dev", "test", "prod"], var.environment)
    error_message = "Must be either dev, test or prod"
  }
}

variable "application_name" {
  description = "Name of the application utilising resource."
  type        = string
}

variable "raw_state_machines" {
  description = <<EOF
Data structure
---------------
A list of dictionaries, where each dictionary has the following attributes:

REQUIRED
---------
- template_file : File path which this machine corresponds to
- template_input : A dictionary of key/value pairs, outlining in detail the inputs needed for a template to be instantiated
- suffix : Friendly name for the state function
- iam_policy_statements : A list of dictionaries where each dictionary is an IAM statement defining state machine permissions
-- Each dictionary in this list must define the following attributes:
--- sid: Friendly name for the policy, no spaces or special characters allowed
--- actions: A list of IAM actions the state machine is allowed to perform
--- resources: Which resource(s) the state machine may perform the above actions against
--- conditions : An OPTIONAL list of dictionaries, which each defines:
---- test : Test condition for limiting the action
---- variable : Value to test
---- values : A list of strings, denoting what to test for

OPTIONAL
---------
- cloudwatch_retention : How many days logs should be retained for in Cloudwatch, defaults to 90
EOF
  type = list(
    object({
      template_file  = string,
      template_input = map(string),
      suffix         = string,
      iam_policy_statements = list(
        object({
          sid       = string,
          actions   = list(string),
          resources = list(string),
          conditions = optional(list(
            object({
              test     = string,
              variable = string,
              values   = list(string)
            })
          ), [])
        })
      ),
      cloudwatch_retention = optional(number, 90)
    })
  )
  validation {
    condition = alltrue([
      for state_machine in var.raw_state_machines : (tonumber(state_machine.cloudwatch_retention) >= 0)
    ])
    error_message = "cloudwatch_retention for each state machine should be a valid integer greater than or equal to 0"
  }
}

common_iam_policies.tf

Here we define IAM policies that we expect to be common across all state machines, for ease of usage.

locals {
  barebones_statemachine_statements = [
    {
      sid = "BarebonesEventActionsForStatemachine"
      actions = [
        "events:PutEvents",
        "events:DescribeRule",
        "events:PutRule",
        "events:PutTargets"
      ]
      resources = [
        "arn:aws:events:${data.aws_region.current_region.name}:${data.aws_caller_identity.current_account.account_id}:rule/default/StepFunctionsGetEventsForECSTaskRule",
        "arn:aws:events:${data.aws_region.current_region.name}:${data.aws_caller_identity.current_account.account_id}:rule/StepFunctionsGetEventsForECSTaskRule",
        "arn:aws:events:${data.aws_region.current_region.name}:${data.aws_caller_identity.current_account.account_id}:event-bus/default"
      ]
      conditions = []
    }
  ]
}

aws_iam_policy_document.tf

Here we simply concatenate the user-supplied IAM policies with the barebones policy we defined earlier, as well as a dynamically generated policy to allow the state machine access to its own logs.

Notice the use of the suffix for indexing, adhering to the unified index principle mentioned earlier.

locals {
  actual_iam_policy_documents = {
    for state_machine in var.raw_state_machines :
    state_machine.suffix => {
      statements = concat(state_machine.iam_policy_statements, local.barebones_statemachine_statements,
        [
          {
            sid = "ListOwnExecutions",
            actions = [
              "states:ListExecutions"
            ]
            resources = [
              format(
                "arn:aws:states:%s:%s:stateMachine:%s-%s-%s-stepfunction",
                lower(data.aws_region.current_region.name),
                lower(data.aws_caller_identity.current_account.id),
                lower(var.environment),
                lower(var.application_name),
                lower(state_machine.suffix)
              )
            ]
            conditions = []
          },
          {
            sid = "AllowCloudwatchStreamAccess",
            actions = [
              "logs:CreateLogStream",
              "logs:PutLogEvents"
            ],
            resources = [
              "arn:aws:logs:${data.aws_region.current_region.name}:${data.aws_caller_identity.current_account.account_id}:log-group:/aws/stepfunction/${format("%s-%s-%s-stepfunction", var.environment, var.application_name, state_machine.suffix)}",
              "arn:aws:logs:${data.aws_region.current_region.name}:${data.aws_caller_identity.current_account.account_id}:log-group:/aws/stepfunction/${format("%s-%s-%s-stepfunction", var.environment, var.application_name, state_machine.suffix)}:*"
            ]
            conditions = []
          },
          {
            sid = "AllowCloudwatchLogDelivery",
            actions = [
              "logs:CreateLogDelivery",
              "logs:PutResourcePolicy",
              "logs:UpdateLogDelivery",
              "logs:DeleteLogDelivery",
              "logs:DescribeResourcePolicies",
              "logs:GetLogDelivery",
              "logs:ListLogDeliveries",
              "logs:DescribeLogGroups"
            ],
            resources  = ["*"]
            conditions = []
          }
        ]
      )
    }
  }
}

data "aws_iam_policy_document" "attached_policies" {
  for_each = local.actual_iam_policy_documents

  dynamic "statement" {
    for_each = each.value["statements"]

    content {
      sid       = statement.value["sid"]
      actions   = statement.value["actions"]
      resources = statement.value["resources"]

      dynamic "condition" {
        for_each = statement.value["conditions"]

        content {
          test     = condition.value["test"]
          variable = condition.value["variable"]
          values   = condition.value["values"]
        }
      }
    }
  }
}

state_machine.tf

Here we cobble together:

- A templatefile for the state machine JSON, allowing us to provide generalised JSON files to define our state machines but then pass in inputs at runtime to determine variable input. For example, if you need to run a “determine-date-partition” lambda in your state machine - but you’re instantiating that in three different AWS accounts - this allows us to look up the ARN at runtime and supply it to the module to then use in the Terraform templating engine.
- A JSON representation of our previously generated policy for the state machine in aws_iam_policy_document.tf - again notice that by using suffix, which is a known attribute, we can look up values pertinent to each iteration without cross-contamination of individual elements.

And then use this to actually create n state machines.

locals {
  actual_state_machines = {
    for state_machine in var.raw_state_machines :
    state_machine.suffix => merge(state_machine, {
      state_machine_definition = templatefile(state_machine.template_file, state_machine.template_input)
      policy_json              = data.aws_iam_policy_document.attached_policies[state_machine.suffix].json
      state_machine_name       = format("%s-%s-%s-stepfunction", var.environment, var.application_name, state_machine.suffix)
    })
  }
}

module "step_function_state_machine" {
  for_each = local.actual_state_machines
  depends_on = [
    data.aws_iam_policy_document.attached_policies
  ]
  source  = "terraform-aws-modules/step-functions/aws"
  version = "4.2.0"

  name         = each.value["state_machine_name"]
  create_role  = true
  policy_jsons = [each.value["policy_json"]]
  definition   = each.value["state_machine_definition"]

  logging_configuration = {
    "include_execution_data" = true
    "level"                  = "ALL"
  }

  cloudwatch_log_group_name              = "/aws/vendedlogs/states/${each.value["state_machine_name"]}"
  cloudwatch_log_group_retention_in_days = each.value["cloudwatch_retention"]

  service_integrations = {
    stepfunction_Sync = {
      # Set to true to use the default events (otherwise, set this to a list of ARNs; see the docs linked in locals.tf
      # for more information). Without events permissions, you will get an error similar to this:
      # Error: AccessDeniedException: 'arn:aws:iam::xxxx:role/step-functions-role' is not authorized to
      # create managed-rule
      events = true
    }
  }
}

If we were to, for example, instead create 100 state machines manually… trying to maintain such a codebase would be a mess.

Here, we split files by concern and use simplistic iteration to achieve an elegant codebase which - hopefully - makes sense to the implementor six months after the fact when they invariably get asked to extend it.
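For completeness, this is roughly what consuming the module might look like from the user’s side, based only on the variables shown in variables.tf above. The source path, template file, ARNs and account ID are placeholders rather than real values.

```hcl
module "state_machines" {
  # Placeholder source: substitute the module's real location and a pinned version.
  source = "./modules/state-machine"

  environment      = "dev"
  application_name = "demo"

  raw_state_machines = [
    {
      suffix        = "etl"
      template_file = "${path.module}/state-machines/etl.json"
      template_input = {
        # Looked up or hard-coded per account; this ARN is illustrative only.
        lambda_arn = "arn:aws:lambda:eu-west-2:123456789012:function:determine-date-partition"
      }
      iam_policy_statements = [
        {
          sid       = "InvokeLambda"
          actions   = ["lambda:InvokeFunction"]
          resources = ["arn:aws:lambda:eu-west-2:123456789012:function:determine-date-partition"]
          # conditions omitted, so it falls back to the empty list.
        }
      ]
      # cloudwatch_retention omitted, so the documented default of 90 applies.
    },
  ]
}
```

Going from one state machine to many is then a matter of appending further objects to raw_state_machines, each with its own unique suffix.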

Implementing architectures

With the pattern well-established, we may examine some common use-case architectures which facilitate its usage.

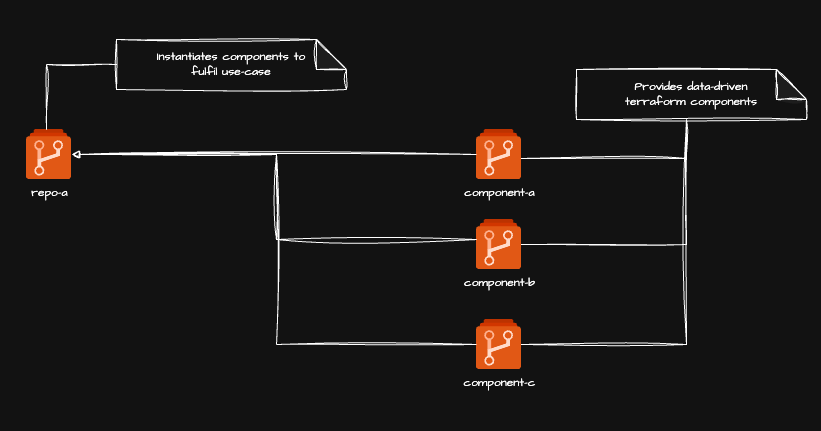

Re-usable Terraform components used to fulfil a use-case

Purpose

To provide a platform of re-usable, data-driven, semantically versioned Terraform components. Said components may then be utilised in downstream repositories as building blocks in order to fulfil specific use-cases.

How it works

- Components A, B and C are all individual repositories
- Each of these repositories defines a singular Terraform module which allows usage of a singular data structure for performance of CRUD operations against a conceptual Terraform resource
- Repo A is a separate repository
- This repository instantiates Components A, B and C in the manner required to fulfil its own use-case(s)
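A minimal sketch of what Repo A might contain is shown below, assuming each component is published as a Git repository with semantic version tags. The URLs, tag numbers, variable names and attribute values are all hypothetical.

```hcl
locals {
  # Hypothetical data structures, one per component.
  raw_component_a = [
    { name = "landing" },
    { name = "processed" },
  ]

  raw_component_b = [
    { name = "ingest", bucket = "landing" },
  ]
}

module "component_a" {
  # Pinning ?ref= to a tag means an upgrade is a deliberate, reviewable change.
  source = "git::https://example.com/your-org/component-a.git?ref=1.2.0"

  raw_component_a = local.raw_component_a
}

module "component_b" {
  source = "git::https://example.com/your-org/component-b.git?ref=2.0.1"

  raw_component_b = local.raw_component_b
}
```

Repo A holds no resource blocks of its own in this sketch, only data structures and version-pinned references to the components.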

Examples

- https://github.com/sudoblark/sudoblark.terraform.modularised-demo is a demo repository which performs basic ETL operations. It instantiates numerous Terraform modules within Sudoblark in order to fulfil its specific use-case.
- https://github.com/sudoblark/sudoblark.monsternames.api is a live REST API on the web, which instantiates numerous Terraform modules within Sudoblark in order to fulfil its specific use-case.

Mono-repo used to manage a singular product

Purpose

To provide a singular, data-driven, repository which allows management of a singular product.

Its purpose is to maintain a single source of truth, and the benefits of the data-driven Terraform pattern, within the constraints of management for a singular product.

How it works

The mono-repo has a singular locals.tf entrypoint, which defines all required user inputs. These are then used to generate an iterative structure, which is then used to instantiate a localised module to perform actions against the product required.
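In outline, that flow might look something like the sketch below. The repository names, attributes and the ./modules/repository path are hypothetical and are not copied from the example repository.

```hcl
# locals.tf: the single entrypoint that users edit.
locals {
  raw_repositories = [
    { name = "website", visibility = "public" },
    { name = "internal-tools", visibility = "private" },
  ]

  # Iterative structure derived from the raw input, keyed by name.
  actual_repositories = {
    for repository in local.raw_repositories :
    repository.name => repository
  }
}

# main.tf: one instance of the localised module per entry.
module "repository" {
  for_each = local.actual_repositories
  source   = "./modules/repository" # hypothetical module living in the same repo

  name       = each.value.name
  visibility = each.value.visibility
}
```

The difference from the component approach is only where the module lives: it sits alongside the data, inside the same repository, rather than being versioned and consumed from elsewhere.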

Examples

- https://github.com/sudoblark/sudoblark.terraform.github is an example mono-repo to manage the entirety of GitHub for Sudoblark. It follows the data-driven Terraform principles within the constraints of needing to manage a singular product.
